Splunk App for Data Science and Deep Learning overview

The Splunk App for Data Science and Deep Learning (DSDL) is a free app you can download from Splunkbase.

DSDL extends the Splunk platform with advanced analytics, machine learning, and deep learning by running workloads in external containerized environments that use popular data science frameworks.

DSDL supports prebuilt Docker containers that ship with libraries such as TensorFlow and PyTorch, along with natural language processing (NLP) and classical machine learning tools. These containers run resource-intensive tasks outside of the Splunk platform, freeing your Splunk search head from heavy computational loads.

DSDL supports building, training, and operationalizing models with or without GPU acceleration. DSDL also preserves familiar Splunk Machine Learning Toolkit (MLTK) commands, making the end-to-end model development process cohesive and intuitive, all while integrating into your existing Splunk workflows.

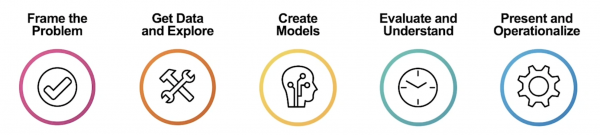

The following image shows a high-level workflow you can follow when using DSDL:

The Splunk App for Data Science and Deep Learning is not an out-of-the-box solution, but a way to create custom machine learning models. To use DSDL, it helps to have domain knowledge and experience with Splunk Search Processing Language (SPL), the Splunk platform, the Splunk Machine Learning Toolkit (MLTK), Python, and JupyterLab notebooks.

Splunk App for Data Science and Deep Learning features

DSDL can enhance your data analytics capabilities in the following ways:

- Empowering advanced analytics: Access advanced data science, machine learning, and deep learning tools within the Splunk environment.

- Optimizing resources: Offload computationally intensive tasks to external computing resources so that model workloads don't degrade the performance of your Splunk infrastructure on other critical tasks. Choose the computing resources that best fit your needs, whether on-premises servers, cloud-based solutions, or specialized hardware.

- Streamlining workflows: Integrate data ingestion, model development, training, and deployment within a single, unified platform, reducing the complexity of managing multiple tools.

- Facilitating collaboration: Share models, code, and insights within your team using integrated tools like Jupyter notebooks and MLflow, so that data scientists and analysts work in the same tools and environments.

- Enhancing scalability: Easily scale computational resources to handle growing data volumes and more complex models.

- Enhancing observability: Monitor and troubleshoot your models and infrastructure using Splunk Observability tools, ensuring reliability and optimal performance.

Requirements for the Splunk App for Data Science and Deep Learning

To run the Splunk App for Data Science and Deep Learning, you need the following:

- Splunk Enterprise 8.2.x or higher, or Splunk Cloud Platform.

- Installation of the correct version of the Python for Scientific Computing (PSC) add-on from Splunkbase for your operating system:

  - Mac OS environment.

  - Windows 64-bit environment.

  - Linux 64-bit environment.

- Installation of the Splunk Machine Learning Toolkit app from Splunkbase.

- An internet-connected container environment, using one of the following options:

  - Docker: Set up a straightforward environment, typically without Transport Layer Security (TLS), for smaller or development use cases.

  - Kubernetes: Orchestrate larger-scale environments using TLS-enabled Kubernetes clusters, for example Amazon EKS or Red Hat OpenShift. This option provides secure, scalable deployment of containers.

Using predefined workflows

DSDL provides predefined JupyterLab Notebooks for building, testing, and operationalizing models within the Splunk ecosystem. DSDL supports both CPU and GPU containers to address diverse performance needs. You can interact with your data in the following ways:

- Pulling data directly from Splunk into JupyterLab using the Splunk REST API for interactive exploration.

- Pushing data from Splunk searches into the container environment for structured model development using mode=stage.
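As a rough illustration of the two options above, the following Python sketch builds a request for the Splunk REST search export endpoint (the pull path) and assembles an SPL string that uses mode=stage (the push path). It is a minimal sketch under stated assumptions, not the helper code that ships in the DSDL notebooks: the function names, host, token, index, and notebook name are hypothetical, and the `algo=` and `into app:` parts of the `fit MLTKContainer` search follow the pattern commonly shown in DSDL examples rather than anything defined in this topic, so verify them against your installed version.

```python
import urllib.parse
import urllib.request


def build_export_request(base_url, token, spl, earliest="-15m", latest="now"):
    """Build (but do not send) a POST request to the search export endpoint."""
    query = spl.strip()
    # The REST search endpoints expect the query to begin with a generating
    # command, so prepend "search" unless the query already starts with one.
    if not query.startswith(("search ", "|")):
        query = "search " + query
    body = urllib.parse.urlencode({
        "search": query,
        "earliest_time": earliest,
        "latest_time": latest,
        "output_mode": "json",
    }).encode("utf-8")
    return urllib.request.Request(
        base_url.rstrip("/") + "/services/search/jobs/export",
        data=body,
        headers={"Authorization": "Bearer " + token},
        method="POST",
    )


def stage_search(base_search, algo, fields, model_name):
    """Return SPL that stages search results in the container (assumed syntax)."""
    return "{} | fit MLTKContainer mode=stage algo={} {} into app:{}".format(
        base_search, algo, " ".join(fields), model_name
    )


# Hypothetical host, token, and index; replace with your own values.
request = build_export_request(
    "https://splunk.example.com:8089",
    "REPLACE_WITH_TOKEN",
    "index=_internal | head 5",
)
# To run the search, pass the request to urllib.request.urlopen(request)
# and read the streamed JSON lines from the response.

# Hypothetical notebook name, field names, and model name.
print(stage_search("| inputlookup iris.csv", "barebone_template",
                   ["petal_length", "petal_width"], "my_model"))
```

Building the request separately from sending it keeps the sketch testable without a live Splunk instance. In practice you would also configure certificate verification for the management port.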

Splunk App for Data Science and Deep Learning roles

The Splunk App for Data Science and Deep Learning includes the following 2 roles:

| Role name | Description |
|---|---|
| mltk_container_user | This role inherits from the default Splunk platform user role and extends it with 2 capabilities. |
| mltk_container_admin | This role inherits capabilities from mltk_container_user and has 1 additional capability. |

To learn more about managing Splunk platform users and roles, see Create and manage roles with Splunk Web in the Securing Splunk Enterprise manual.

Splunk App for Data Science and Deep Learning permissions

The Splunk App for Data Science and Deep Learning includes the following 3 permissions:

| Permission | Description |
|---|---|
| configure_mltk_container | Ability to access the setup page and make configuration changes. |
| list_mltk_container | Ability to list container models as visible on the container dashboard. |
| control_mltk_container | Ability to start or stop containers. |

Splunk App for Data Science and Deep Learning restrictions

The DSDL model-building workflow includes processes that occur outside of the Splunk platform ecosystem, leveraging third-party infrastructure such as Docker, Kubernetes, OpenShift, and custom Python code defined in JupyterLab. Any third-party infrastructure processes are out of scope for Splunk platform support or troubleshooting.

See the following table for DSDL app limitations and restrictions:

| App limitation or restriction | Description |
|---|---|
| Docker, Kubernetes, and OpenShift environments | The architecture only supports Docker, Kubernetes, and OpenShift as target container environments. |
| No indexer distribution | Data is processed on the search head and sent to the container environment. Data cannot be processed in a distributed manner, such as streaming data in parallel from indexers to one or many containers. However, all advantages of search in a distributed Splunk platform deployment still exist. |
| Security protocols | Data is sent from a search head to a container over HTTPS. Splunk administrators must take steps to secure the setup of DSDL and the container environment accordingly. |
| Atomic container model | Models created using the Splunk App for Data Science and Deep Learning (DSDL) are atomic in that each model is served by one container. |
| Global model sharing | Models must be shared if they need to be served from a dedicated container. Set the model permission to Global. |
| Model naming convention | Model names must not include whitespace for model configuration to work properly. |

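The model naming restriction in the table above is easy to check before you run a search. The following Python sketch shows one way to do that. Both helper names are hypothetical and are not part of DSDL, and the check covers only the whitespace rule stated in this topic, not any other naming rules the app might enforce.

```python
import re


def validate_model_name(name):
    """Return the name unchanged, or raise ValueError if it breaks the rule."""
    if not name:
        raise ValueError("model name must not be empty")
    if re.search(r"\s", name):
        raise ValueError("model name must not contain whitespace: %r" % name)
    return name


def suggest_model_name(name):
    """Replace each run of whitespace with an underscore after trimming."""
    return re.sub(r"\s+", "_", name.strip())


print(suggest_model_name("anomaly detector v1"))  # anomaly_detector_v1
```

Rejecting a bad name outright, rather than silently rewriting it, keeps the model name in your search identical to the name that is stored. The suggestion helper is there only to propose a replacement.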

This documentation applies to the following versions of Splunk® App for Data Science and Deep Learning: 5.2.0, 5.2.1
